emnlp2022-ACA论文代码阅读

emnlp2022-ACA论文代码阅读

emnlp2022-ACA论文代码阅读

1. 入口引起的全局流程

以tacred数据集为例,首先,执行的脚本都在/bash/tacred/这个路径下,其中带_aca后缀的就代表使用了作者的方法,然后前缀的emar/re_cre代表作者这种model-agnostic方法使用的baseline;

以emar_aca.sh为例,在单卡GPU上运行,执行的是main/emar.py这个文件

1 | |

这里详解一下各个参数的意思

1.1 main/emar.py

从if __name__ == '__main__'开始看,首先使用get_config()函数,主要就是读取shell文件中配置的各个超参数

1 | |

因为是EMAR.py这个文件,那就首先拼接config.exp_name,这个之后将作为logs文件夹中记录的log

1 | |

如果不存在log_dir那个路径,则mkdir,这里logs是一个config中的默认路径./logs

1 | |

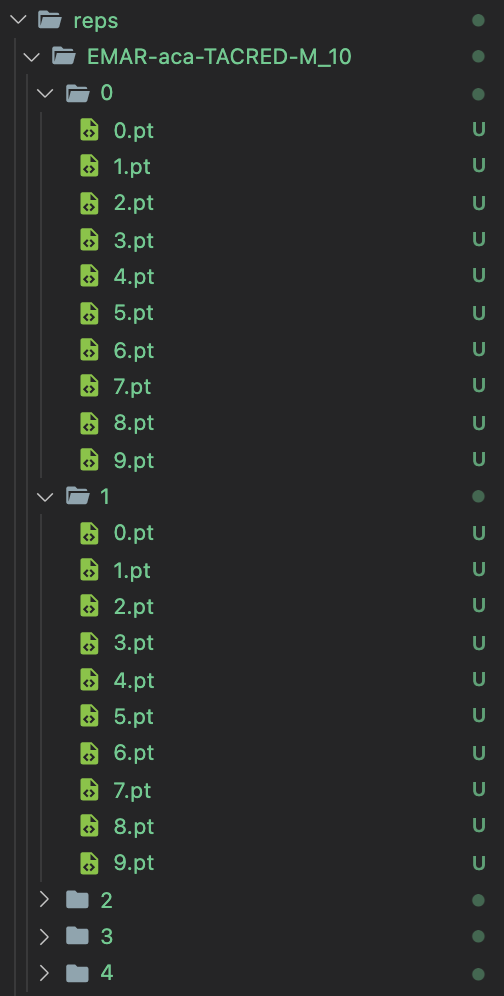

如果不存在这个reps目录下的路径则make,从后续跑出来的文件来说,这里像是一个模型的存储,每次跑出来存储一个pt文件,实验是跑5次(不同顺序的?) ,然后每个分成10个阶段的

设置logging

1 | |

设置tokenizer,并加入作者在论文中说的speical token

1 | |

设置record列表

1 | |

如果是fewrel数据集,要加载一个data/pid2name.json,fewrel数据集把每种关系编号为一个比如P126,然后这里有一个dict的对应

1 | |

P126,maintained by,负责保持主体(例如基础设施)正常运行的人员或组织

1.1.1 开启针对于total_round(5次实验)的循环

1 | |

1.1.1.1 设置基础的记录信息和保存信息,以及seed

首先设置基础的记录信息和保存信息,以及seed 这里这些都要设置吗?之前感觉只有几行

1 | |

1.1.1.2 设置data的sampler(采样器),在sampler.py文件中

1 | |

- 下面是这个init的定义,初始化

1 | |

- 读取关系的时候用的是下面这个函数

1 | |

config.relation_file配置的是下面这个文件data/id2rel_tacred.json,一个列表

1 | |

原来是通过id索引关系(id2rel,是一个list),现在制作一个反向的mapping通过关系索引id(rel2id,是一个dict)

_read_data的逻辑是下面这样的

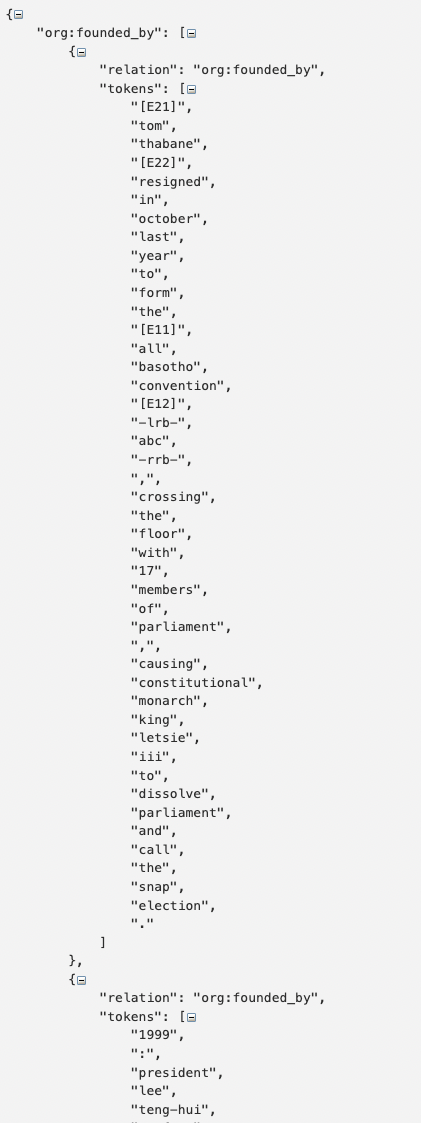

加载的数据文件是config.data_file,也就是data/data_with_marker_tacred.json,这个数据格式化看起来是这样的,作者按照这个关系,比如说org:founded_by进行第一层级的建模,然后是已经把tacred数据集进行与处理后了(所以可能可以公开发布出来吧),这里比如[E21]/[E22]这些special token就和论文里面说的一样

这里因为是按照关系来的,对于CRE任务来说不会出现串了的情况

1 | |

这里制作了dataset之后,会存成一个pt文件,下次用到的时候不用再制作了,直接读取pt文件就可以

- 重定义

data_sampler类的__iter__与__next__

1 | |

1.1.1.3 传出来id2rel, rel2id,然后初始化一个BertEncoder

1 | |

这里BertEncoder是一个整体的大结构,作者自己实现了换一下,不只是一个普通的层

1 | |

1.1.1.4 针对ACA的一些配置处理,并且建立proto_softmax_layer,设置memorized_samples字典memorized_samples = {}

1 | |

- 内部的这个模型(emar)的复现,这里大概来说一下,主要分为

base_model类还有proto_softmax_layer类

1 | |

这里的add_relation_num实在是不太理解,为什么会是12呢

对于fewrel应该是12种,(因为每次8个,8+4),那tacred会不会这里有bug?

1.1.2 开启对于sampler的循环(10个Task)

这里sampler之前已经处理好了,定义了是4个4个的这样的

1 | |

1.1.2.1 详解get_aca_data函数

1 | |

1.1.2.2 train_simple_model函数

1 | |

1.1.3 收尾(对齐1.1.1)

1 | |

2. 代码中阅读到的问题和重点记录

- 作者使用的是special token的方式,依次加入

[E11] [E12] [E21] [E22],bert的词表大小是30522,所以开始的编号是0-30511的,这几个token也就依次被定位为30522, 30523, 30524, 30525这样的; - 对于这些special token的加入,作者是在预处理数据的时候就完成了,数据预处理的时候是先用relation type对应到各个样例,这样relation type在json文件中是第一层,就可以让持续学习比较好处理这种文件;

- config文件和shell文件中的下面这几个参数,是针对fewrel数据集来用的,而TACRED数据集是定死的,训练集340,测试集40,没有验证集;

1 | |

- 作者在划分数据集的时候这里代码有一点bug,这里因为是<=的符号,所以最后出来的都是41个数据,也不知道是不是他对齐的工作都是这么操作的,这个从作者输出的confusion matrix中也能看出来

https://github.com/Wangpeiyi9979/ACA/blob/898238415202d4bd6b7b555660b82732ab03483e/sampler.py#L114

1 | |

1 | |

- 在实现ACA的方法的时候,作者写的还是和论文里差不多的,论文里说用第一种关系重建生成N//2种,用第二种反向生成N种,在代码里看起来这是最理想的情况,如果遇到一些特殊情况,或者反向的时候是那种对称的关系,那么就可能不到这个种类数目;

- 另外在代码这里涉及到一个model.incremental_learning,这个地方感觉不是很理解,他这个add_class的数目是3倍的

rel_per_task,那么对于TACRED每个task新进来4个关系的话,这个add_relation_num就变成12,加起来就是40+12=52种关系分类,但是每一次最多也就是40 + 4 + 2种关系的分类,这种在训练的过程里,是需要模型来学习这种关系吗?因为感觉模型的fc层,不是从4 8 12 16这样一直加上来的;;;另外这里也没理解这个with torch.no_grad()的作用;

1 | |

反向关系也直接定义成一种新的关系,像群里师兄说的,这个关系会不会没什么意义,而很多反向关系是可以在数据集中找到的,不知道这些数据集中有没有对反向关系的定义,如果我开始学习了B->A是一种反向新定义的没有含义的关系,但是B->A这个关系在之后训练出现了,那感觉就很容易分类错误?

那种拼接的方式也确实像师兄说的比较粗暴,看起来只是怎么取了5个词,强行给拼到一块上一样;

cur_acc就是在当前task的几个关系上的F1-Score,total_acc就是在当前+之前见过的所有关系上的F1-score,看起来作者主表格中挂的应该是total_acc

1 | |

一般来说,学习的顺序和采样的情况都会影响,作者就是很多随机策略,每次换一个seed这样的,5次实验,每次跑10个task,然后比如TACRED就是随机打乱后采样320个训练数据加40个测试数据;

看起来像是一个train_simple_model + train_model的学习范式,在train_simple_model的时候把aca的数据混进去,但好像后续就不需要了(对EMAR还没了解太多);